ImageNet Challenge 2012 Analysis

Detecting avocados to zucchinis: what have we done, and where are we going?

Olga Russakovsky, Jia Deng, Zhiheng Huang, Alexander C. Berg, Li Fei-Fei.

International Conference on Computer Vision (ICCV), 2013.

[pdf] [supplement] [slides pptx | slides pdf] [bibTex]

- If we keep only the 562 synsets of ILSVRC 2012 (out of 1000) that are hardest to localize, ILSVRC is comparable to PASCAL VOC in terms of chance performance of localization and object scale.

- ILSVRC is similar to PASCAL VOC with respect to the average number of object instances and the amount of clutter per image.

- For the classification challenge, the SuperVision algorithm outperforms the second place competitor ISI on nearly all categories.

- For the detection challenge, SuperVision consistently outperforms the second place competitor Oxford VGG except when considering the set of 239 hardest categories with respect to localization difficulty.


1. Visualization of ILSVRC Data

We visualize the variety of the ILSVRC 2012 data using the validation set images. The images are first rescaled to the canonical size of 300x300 pixels. For each resized image we generate a 300x300 heat map where the region occupied by the annotated object of interest has value 1 while the rest of the image has value 0. The first column shows the average heat map. The red overlaid box is the average of all the annotated ground truth bounding boxes (computed by averaging each of the 4 coordinates). The second column shows the average of all images in this category.

switch, electric switch, electrical switch

Click here for all ILSVRC 2012 categories
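The averaging described above can be sketched in a few lines of Python. This is only an illustration, not the code used to produce the visualizations: the function names, the `(x_min, y_min, x_max, y_max)` box format and the restriction to one annotated box per image are assumptions made for the example.

```python
def box_heat_map(box, width, height, size=300):
    """Binary size x size map: 1 inside the rescaled box, 0 elsewhere.

    box is (x_min, y_min, x_max, y_max) in pixels of the original image
    (an assumed format); width and height are the original image size.
    """
    sx, sy = size / width, size / height
    x0, y0 = round(box[0] * sx), round(box[1] * sy)
    x1, y1 = round(box[2] * sx), round(box[3] * sy)
    return [[1.0 if (x0 <= x < x1 and y0 <= y < y1) else 0.0
             for x in range(size)] for y in range(size)]


def average_heat_map(annotations, size=300):
    """Average the per-image heat maps.

    annotations is a list of (box, width, height), one entry per image.
    """
    total = [[0.0] * size for _ in range(size)]
    for box, width, height in annotations:
        heat = box_heat_map(box, width, height, size)
        for y in range(size):
            for x in range(size):
                total[y][x] += heat[y][x]
    n = len(annotations)
    return [[value / n for value in row] for row in total]


def average_box(annotations, size=300):
    """Average each of the 4 rescaled box coordinates over all images."""
    scaled = [(b[0] * size / w, b[1] * size / h,
               b[2] * size / w, b[3] * size / h)
              for b, w, h in annotations]
    return tuple(sum(coord) / len(scaled) for coord in zip(*scaled))
```

The `size` parameter defaults to the 300x300 canonical size from the text; a smaller value is convenient for quick checks.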

2. Analysis of Dataset Difficulty: ILSVRC 2012 vs. PASCAL VOC 2012

We demonstrate that ILSVRC is a challenging testbed for evaluating object detection algorithms. We compare it to PASCAL VOC, which the computer vision community has accepted as the benchmark detection dataset.

2.1 Variety of Object Classes

| PASCAL VOC | ILSVRC |
| --- | --- |
| aeroplane | |
| bicycle | |
| bird | ... |
| boat | ... |
| bottle | ... |
| bus | |
| car | ... |
| cat | ... |
| chair | |
| cow | |
| diningtable | |
| dog | ... |
| horse | |
| motorbike | |
| person | |
| pottedplant | |
| sheep | ... |
| sofa | |
| train | |
| tvmonitor | |
| Not present | 5 examples of ILSVRC categories which are not present in PASCAL. Click here for all ILSVRC 2012 categories ... |

2.2 Chance Performance of Localization (CPL)

We define the chance performance of localization (CPL) measure as the expected accuracy of a detector which first randomly samples an object instance of that class and then uses its bounding box directly as the proposed localization window on all other images (after rescaling the images to the same size). Concretely, let B_1, B_2, \ldots, B_N be all the bounding boxes of the object instances within a class, and define the intersection over union (IOU) measure as

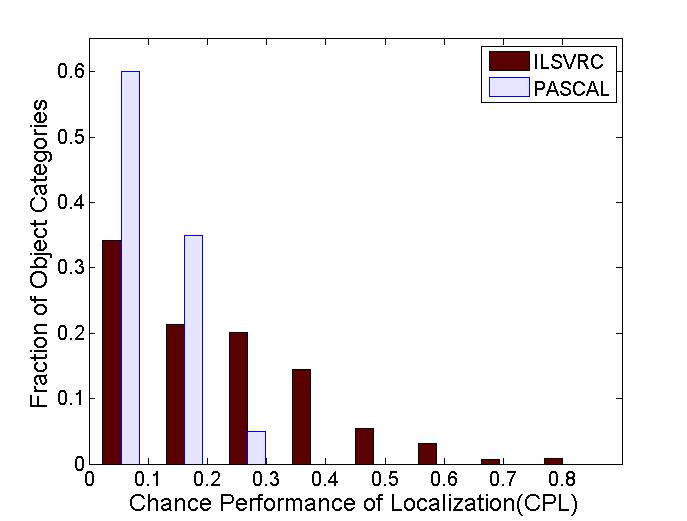

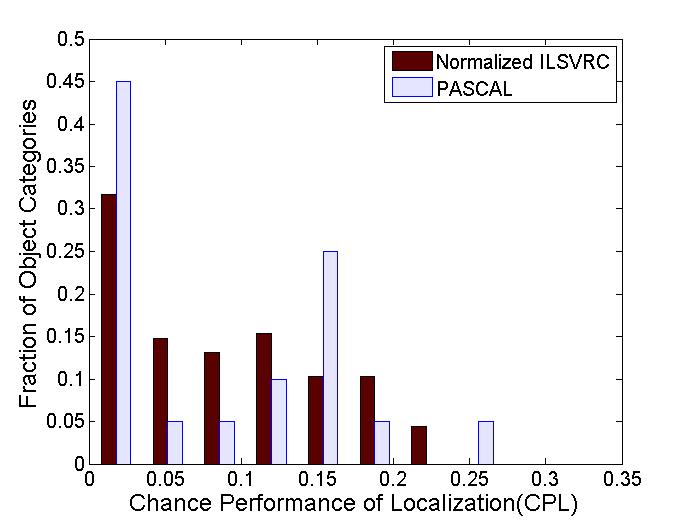
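As a rough illustration of the definition, the sketch below computes CPL for one class as the fraction of ordered pairs of distinct boxes (B_i, B_j) whose IOU reaches a threshold. The pairwise reading, the 0.5 threshold (borrowed from the detection criterion in Section 3.2), the function names and the `(x_min, y_min, x_max, y_max)` box format are assumptions of this example rather than details confirmed by the formulas above.

```python
def iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    inter_w = min(a[2], b[2]) - max(a[0], b[0])
    inter_h = min(a[3], b[3]) - max(a[1], b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # the boxes do not overlap
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def chance_performance_of_localization(boxes, threshold=0.5):
    """Fraction of ordered pairs (i, j), i != j, with IOU >= threshold.

    boxes are assumed to be expressed in a common, rescaled image frame.
    """
    n = len(boxes)
    if n < 2:
        raise ValueError("CPL needs at least two boxes")
    hits = sum(1
               for i in range(n)
               for j in range(n)
               if i != j and iou(boxes[i], boxes[j]) >= threshold)
    return hits / (n * (n - 1))
```

A class whose instances all occupy roughly the same region of the image gets a CPL near 1, while a class of small, scattered objects gets a CPL near 0.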

Figure 2.1 shows the CPL distribution on the ILSVRC and PASCAL detection datasets. The 20 categories of PASCAL have an average CPL of 8.8% on the validation set; the average CPL for all 1000 categories of ILSVRC is 20.8%. If we keep only the 562 most difficult categories of ILSVRC, the average CPL is the same as that of PASCAL (up to 0.02% precision). We refer to this set of 562 categories as the normalized ILSVRC dataset. Figure 2.2 compares the CPL distribution of normalized ILSVRC with that of PASCAL.

| PASCAL VOC | ILSVRC |
| --- | --- |
| bottle CPL=0.38% | basketball CPL=0.082% |
| pottedplant CPL=0.94% | swimming trunks, bathing trunks CPL=0.098% |
| sheep CPL=1.3% | ping-pong ball CPL=0.13% |
| chair CPL=1.5% | rubber eraser, rubber, pencil eraser CPL=0.13% |
| boat CPL=1.7% | nail CPL=0.19% |
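One way to pick a cutoff such as the 562 categories of the normalized ILSVRC is sketched below: rank categories from hardest (lowest CPL) to easiest and keep the prefix whose mean CPL is closest to a target value, for example the PASCAL average. The exact selection procedure is not spelled out in the text, so the closest-mean rule and the function name are assumptions.

```python
def normalized_subset(cpl_by_category, target_mean):
    """Return the hardest-first prefix whose mean CPL is closest to target_mean.

    cpl_by_category maps a category name to its CPL (lower means harder).
    """
    ranked = sorted(cpl_by_category, key=cpl_by_category.get)  # hardest first
    best_k, best_gap, running = 0, float("inf"), 0.0
    for k, name in enumerate(ranked, start=1):
        running += cpl_by_category[name]
        gap = abs(running / k - target_mean)
        if gap < best_gap:
            best_k, best_gap = k, gap
    return ranked[:best_k]
```

For instance, with CPL values 0.01, 0.03, 0.08 and 0.40 and a target mean of 0.04, the three hardest categories are kept because their mean is the closest match.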

2.3 Average Object Scale

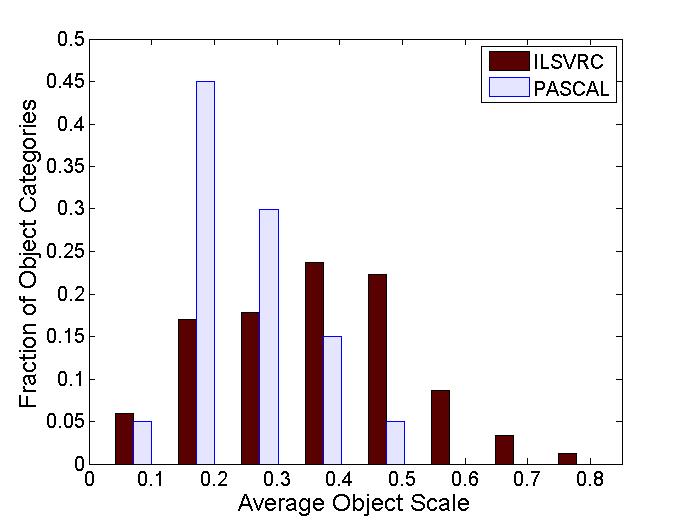

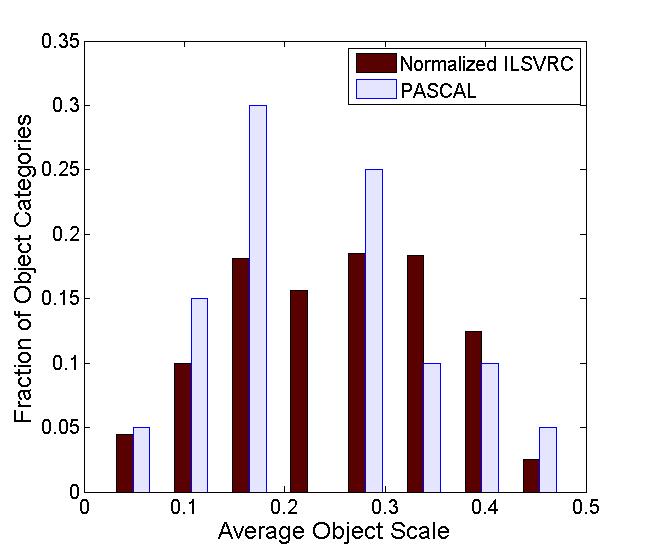

The object scale in an image is calculated as the ratio between the area of the ground truth bounding box and the area of the image. The average scale per class is 0.241 on PASCAL, 0.358 on ILSVRC and 0.251 on the normalized ILSVRC. Figure 2.3 and Figure 2.4 show the distribution of object scale on the ILSVRC and PASCAL datasets.

| PASCAL VOC | ILSVRC |
| --- | --- |
| bottle scale=0.07 | basketball scale=0.02 |
| sheep scale=0.12 | ping-pong ball scale=0.02 |
| pottedplant scale=0.12 | swimming trunks, bathing trunks scale=0.02 |
| chair scale=0.13 | pickelhaube scale=0.03 |
| car scale=0.14 | bearskin, busby, shako scale=0.03 |
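The scale measure defined in this section is a simple area ratio. The sketch below computes it per instance, averages it per class, and then averages over classes; the function names and the input layout (a dictionary from class name to a list of `(box, image_width, image_height)` tuples) are assumptions for illustration.

```python
def object_scale(box, image_width, image_height):
    """Area of the bounding box divided by the area of the image."""
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    return box_area / (image_width * image_height)


def average_scale_per_class(instances_by_class):
    """Mean object scale of each class.

    instances_by_class maps a class name to a list of
    (box, image_width, image_height) tuples.
    """
    return {
        name: sum(object_scale(*inst) for inst in instances) / len(instances)
        for name, instances in instances_by_class.items()
    }


def dataset_average_scale(instances_by_class):
    """Mean over classes of the per-class average scale."""
    per_class = average_scale_per_class(instances_by_class)
    return sum(per_class.values()) / len(per_class)
```

Averaging per class first keeps classes with many instances from dominating the dataset-level number.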

2.4 Average Number of Instances

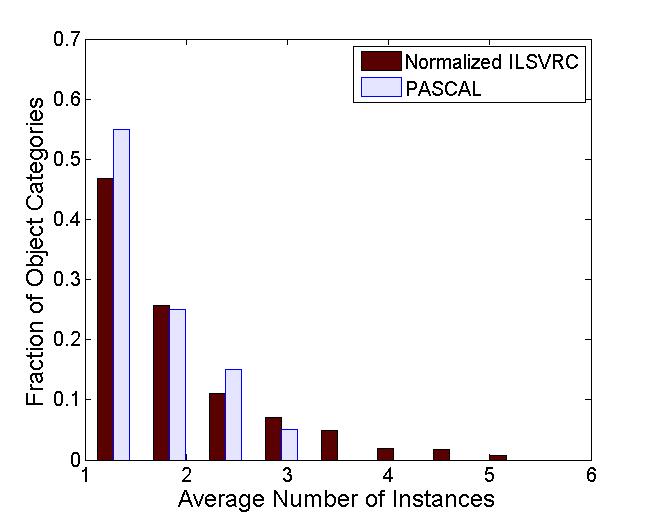

The number of instances in an image is calculated as the number of non-overlapping ground truth bounding boxes in that image. The average number of instances per class is 1.69 on PASCAL, 1.590 on ILSVRC and 1.911 on the normalized ILSVRC. The histograms of the average number of instances for ILSVRC and PASCAL are shown in Figure 2.5 and Figure 2.6.

| PASCAL VOC | ILSVRC |
| --- | --- |
| sheep instance number=3.1 | strawberry instance number=5.4 |
| person instance number=2.3 | hay instance number=5.2 |
| chair instance number=2.2 | bubble instance number=5.2 |
| cow instance number=2.2 | pretzel instance number=5.1 |
| bottle instance number=2.0 | fig instance number=4.8 |

2.5 Level of Clutter

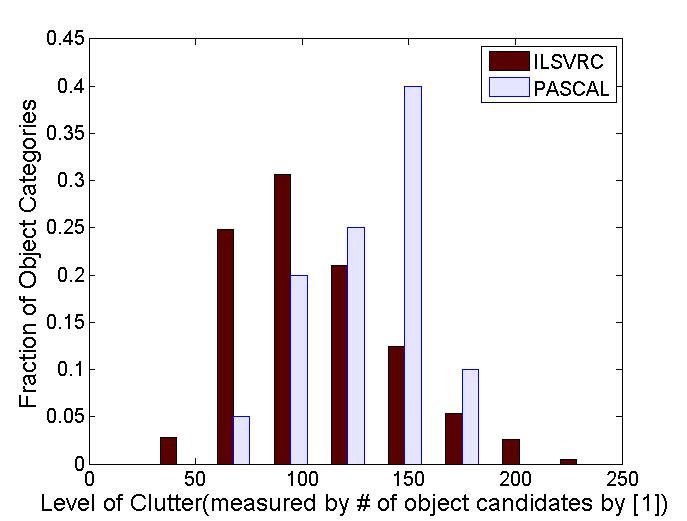

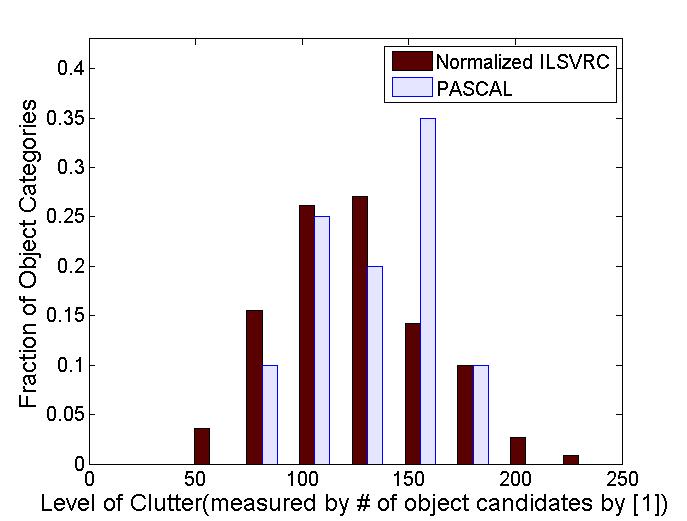

To capture the level of clutter of an image, we resize it so that its largest dimension is 300 pixels, use an unsupervised class-independent selective search [1] approach to generate candidate image regions likely to contain a coherent object, and then filter out all boxes which are entirely contained within an object instance or correctly localize it according to the IOU criterion. The remaining boxes are considered clutter. We use the number of clutter boxes as a measure of clutter level. The average level of clutter per class is 129.96 on PASCAL, 106.98 on ILSVRC and 124.67 on the normalized ILSVRC. Figures 2.7 and 2.8 demonstrate that ILSVRC is comparable to the PASCAL object detection dataset on this metric.

* We have corrected an error in an earlier version of the analysis. Previously the PASCAL average level of clutter was given as 74.94 and that of the normalized ILSVRC as 107.15. We apologize for any inconvenience.

| PASCAL VOC | ILSVRC |
| --- | --- |
| pottedplant clutter=173.4 | football helmet clutter=242.0 |
| bottle clutter=170.5 | organ, pipe organ clutter=236.5 |
| car clutter=159.7 | steel drum clutter=230.5 |
| person clutter=156.5 | umbrella clutter=224.0 |
| chair clutter=156.0 | bearskin, busby, shako clutter=218.6 |
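The filtering step of the clutter measure can be sketched as follows. The candidate boxes are taken as given (in the analysis they come from selective search [1], which is not reimplemented here), and the 0.5 IOU threshold, the function names and the `(x_min, y_min, x_max, y_max)` box format are assumptions of this example.

```python
def iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    inter_w = min(a[2], b[2]) - max(a[0], b[0])
    inter_h = min(a[3], b[3]) - max(a[1], b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def is_contained(inner, outer):
    """True if box inner lies entirely within box outer."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and inner[2] <= outer[2] and inner[3] <= outer[3])


def clutter_level(proposals, instances, threshold=0.5):
    """Count candidate boxes that neither sit inside nor localize an instance.

    proposals: candidate boxes from a region proposal method (assumed input).
    instances: ground truth boxes of the object instances in the image.
    """
    return sum(
        1 for p in proposals
        if not any(is_contained(p, g) or iou(p, g) >= threshold
                   for g in instances)
    )
```

Boxes that survive the filter cover background or other objects, so a higher count indicates a busier image.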

[1] van de Sande, K. E. A., Uijlings, J. R. R., Gevers, T., and Smeulders, A. W. M. Segmentation as Selective Search for Object Recognition. ICCV 2011.

3. Analysis of ILSVRC2012 Results

3.1 Classification Challenge

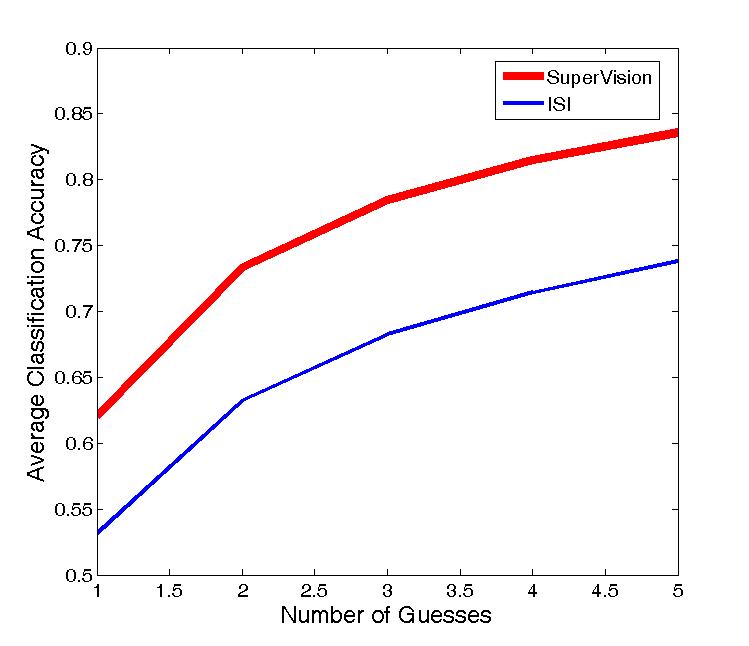

For the ILSVRC2012 classification challenge, each object class C has a set of images associated with it. Given an image, an algorithm is allowed to predict up to 5 object classes (since additional unannotated objects may be present in these images). An image is considered correctly classified if one of these guesses correctly matches the object class C. Classification accuracy of an algorithm on class C is the fraction of correctly classified images.
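The per-class accuracy defined above can be sketched as follows. The function name and the input layout (one ranked list of guessed class labels per image of class C) are assumptions for illustration; the `max_guesses` parameter also allows fewer than 5 guesses to be evaluated.

```python
def class_accuracy(true_class, guesses_per_image, max_guesses=5):
    """Fraction of images of true_class that are correctly classified.

    guesses_per_image holds, for each image of the class, the ranked list
    of predicted class labels; only the first max_guesses are considered.
    """
    correct = sum(1 for guesses in guesses_per_image
                  if true_class in guesses[:max_guesses])
    return correct / len(guesses_per_image)
```

Calling the function with `max_guesses` from 1 to 5 gives the accuracy as a function of the number of guesses allowed.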

We compare the performance of the top 2 algorithms on the ILSVRC2012 classification challenge, namely SuperVision and ISI. Figure 3.1 shows how the average classification accuracy varies according to the number of guesses allowed. SuperVision consistently outperforms ISI.

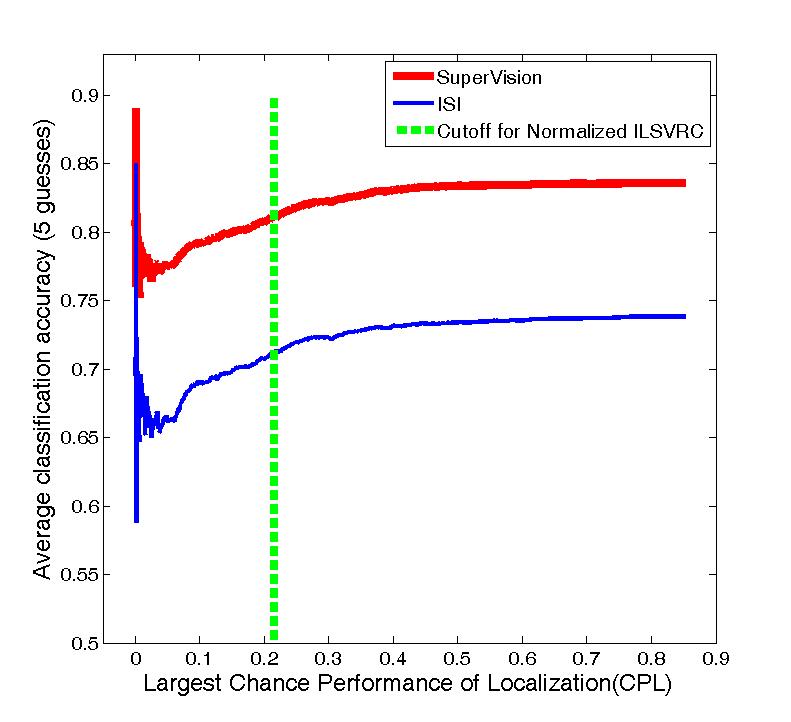

Figure 3.2 shows the cumulative classification accuracy across the categories sorted from hardest to easiest according to CPL. The vertical line is the cutoff for the normalized ILSVRC. SuperVision consistently outperforms ISI.
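A cumulative curve of this kind can be computed as sketched below, reading "cumulative accuracy" as the mean accuracy over the k hardest categories for each k. That reading, along with the function name and the dictionary inputs, is an assumption of this example.

```python
def cumulative_accuracy(cpl_by_category, accuracy_by_category):
    """Mean accuracy over the k hardest categories, for k = 1..N.

    Categories are ranked from hardest (lowest CPL) to easiest.
    Returns the ranked category names and the curve values.
    """
    ranked = sorted(cpl_by_category, key=cpl_by_category.get)
    curve, running = [], 0.0
    for k, name in enumerate(ranked, start=1):
        running += accuracy_by_category[name]
        curve.append(running / k)
    return ranked, curve
```

The last value of the curve is the plain average accuracy over all categories, and the value at the normalized ILSVRC cutoff is the average over that subset.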

Categories with Highest Classification Accuracy (5 guesses)

| SuperVision | ISI |
| --- | --- |
| Leonberg accuracy 1.0 | canoe accuracy 1.0 |
| | geyser accuracy 1.0 |
| daisy accuracy 1.0 | odometer, hodometer, mileometer, milometer accuracy 1.0 |
| yellow lady's slipper, yellow lady-slipper, Cypripedium calceolus, Cypripedium parviflorum accuracy 1.0 | gondola accuracy 0.99 |
| geyser accuracy 1.0 | electric locomotive accuracy 0.99 |

Categories with Lowest Classification Accuracy (5 guesses)

| SuperVision | ISI |
| --- | --- |
| ladle accuracy 0.31 | spatula accuracy 0.08 |
| muzzle accuracy 0.33 | ladle accuracy 0.11 |
| velvet accuracy 0.35 | hatchet accuracy 0.13 |
| hook, claw accuracy 0.36 | screwdriver accuracy 0.17 |
| hatchet accuracy 0.36 | cleaver, meat cleaver, chopper accuracy 0.17 |

Categories Where SuperVision and ISI Differ Most in Classification Accuracy (5 guesses)

| SuperVision Outperformed ISI | ISI Outperformed SuperVision |
| --- | --- |
| weasel win by 0.52 | fountain win by 0.06 |
| Great Dane win by 0.39 | jigsaw puzzle win by 0.06 |
| hog, pig, grunter, squealer, Sus scrofa win by 0.39 | chainlink fence win by 0.06 |
| reel win by 0.36 | sea slug, nudibranch win by 0.05 |
| spatula win by 0.36 | megalith, megalithic structure win by 0.05 |

3.2 Detection Challenge

For the ILSVRC2012 detection challenge, each object class C has a set of images associated with it, and each image is human annotated with bounding boxes B_1, B_2, \ldots indicating the location of all instances of this object class. Given an image, an algorithm is allowed to predict up to 5 annotations, each consisting of an object class c_i and a bounding box b_i. The object is considered correctly detected if for some proposed annotation (c_i, b_i), it is the case that c_i = C and b_i correctly localizes one of the object instances B_1, B_2, \ldots according to the standard intersection over union >= 0.5 criterion. Detection accuracy of an algorithm on class C is the fraction of images where the object is correctly detected.
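The detection criterion above can be sketched as follows. The function names, the `(x_min, y_min, x_max, y_max)` box format and the input layout (a ranked list of `(class, box)` predictions per image) are assumptions for illustration, not the official evaluation code.

```python
def iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    inter_w = min(a[2], b[2]) - max(a[0], b[0])
    inter_h = min(a[3], b[3]) - max(a[1], b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def is_detected(true_class, gt_boxes, predictions,
                max_guesses=5, threshold=0.5):
    """True if some (c_i, b_i) among the first max_guesses predictions has
    c_i equal to true_class and b_i overlapping a ground truth box with
    IOU >= threshold."""
    for label, box in predictions[:max_guesses]:
        if label == true_class and any(iou(box, gt) >= threshold
                                       for gt in gt_boxes):
            return True
    return False


def detection_accuracy(true_class, images):
    """Fraction of images of true_class where the object is detected.

    images is a list of (gt_boxes, predictions) pairs.
    """
    hits = sum(1 for gt_boxes, predictions in images
               if is_detected(true_class, gt_boxes, predictions))
    return hits / len(images)
```

Note that a correct class label with a poorly placed box does not count, which is why detection accuracy is never higher than classification accuracy for the same predictions.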

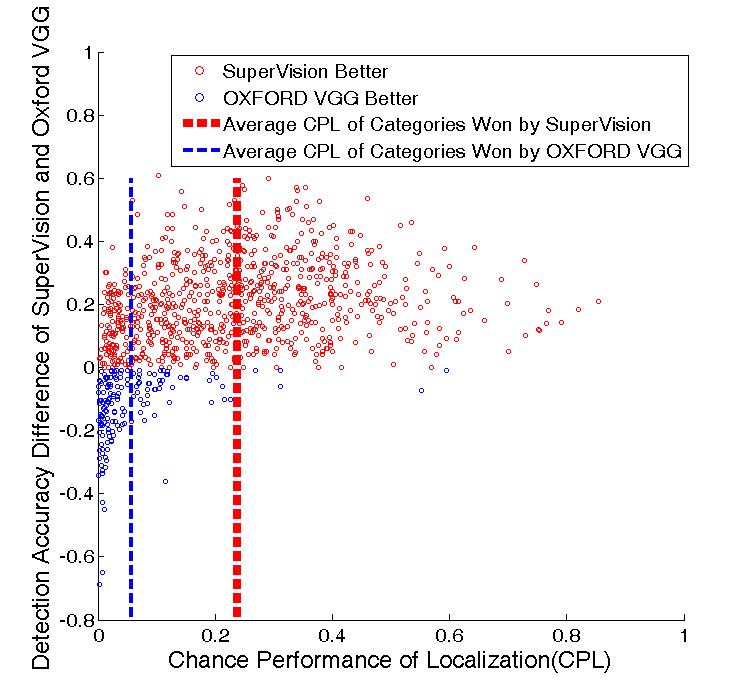

We compare the performance of the top 2 algorithms on the ILSVRC2012 detection challenge, namely SuperVision and Oxford VGG. Figure 3.3 shows the difference between SuperVision and Oxford VGG detection accuracy for each category. SuperVision outperforms Oxford VGG on 825 of the 1000 categories. The categories on which Oxford VGG outperforms SuperVision tend to be the more difficult ones according to CPL, with an average CPL of 0.057.

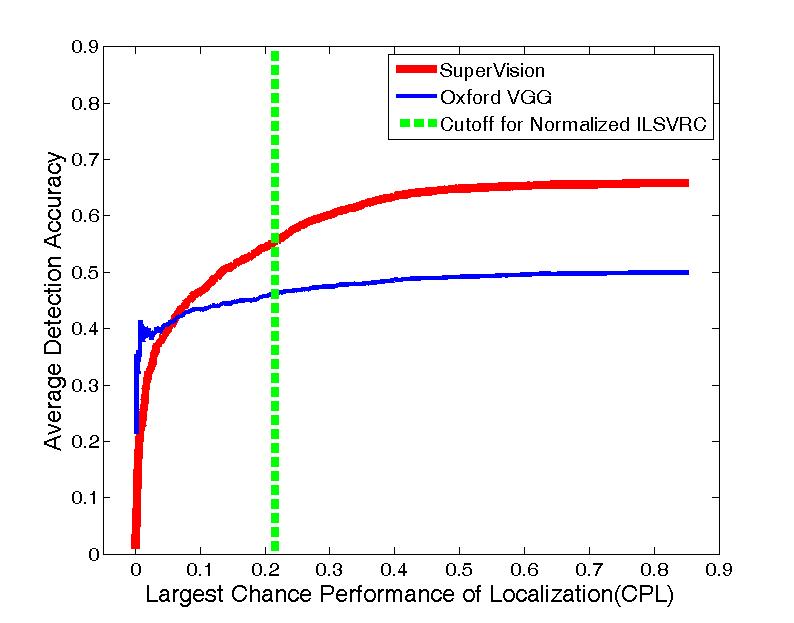

Figure 3.4 shows the cumulative detection accuracy across the categories sorted from hardest to easiest according to CPL. The vertical line is the cutoff for the normalized ILSVRC. SuperVision consistently outperforms Oxford VGG except when considering the set of 239 hardest categories with respect to CPL.

Categories with Highest Detection Accuracy

| SuperVision | Oxford VGG |
| --- | --- |
| convertible accuracy 0.99 | web site, website, internet site, site accuracy 0.97 |
| Leonberg accuracy 0.98 | daisy accuracy 0.94 |
| leopard, Panthera pardus accuracy 0.96 | Leonberg accuracy 0.93 |
| Tibetan mastiff accuracy 0.96 | car mirror accuracy 0.92 |
| | Model T accuracy 0.91 |

Categories with Lowest Detection Accuracy

| SuperVision | Oxford VGG |
| --- | --- |
| volleyball accuracy 0.01 | sea snake accuracy 0.01 |
| basketball accuracy 0.01 | spider monkey, Ateles geoffroyi accuracy 0.02 |
| ping-pong ball accuracy 0.02 | hook, claw accuracy 0.02 |
| rugby ball accuracy 0.03 | wooden spoon accuracy 0.03 |
| croquet ball accuracy 0.04 | water snake accuracy 0.04 |

Categories Where SuperVision and Oxford VGG Differ Most in Detection Accuracy

| SuperVision Outperformed Oxford VGG | Oxford VGG Outperformed SuperVision |
| --- | --- |
| Irish terrier win by 0.67 | bearskin, busby, shako win by 0.69 |
| | volleyball win by 0.65 |
| weasel win by 0.6 | rugby ball win by 0.45 |
| otter win by 0.58 | steel drum win by 0.43 |
| whiptail, whiptail lizard win by 0.57 | microwave, microwave oven win by 0.36 |