ImageNet Large Scale Visual Recognition Challenge 2014 (ILSVRC2014)

News

- June 2, 2015: Additional announcement regarding submission server policy is released.

- May 19, 2015: Announcement regarding submission server policy is released.

- December 17, 2014: ILSVRC 2015 is announced.

- September 2, 2014: A new paper which describes the collection of the ImageNet Large Scale Visual Recognition Challenge dataset, analyzes the results of the past five years of the challenge, and even compares current computer accuracy with human accuracy is now available. Please cite it, along with the original ImageNet paper, when reporting ILSVRC2014 results or using the dataset.

- August 18, 2014: Check out the New York Times article about ILSVRC2014.

- August 18, 2014: Results are released.

- August 18, 2014: Test server is open.

- July 25, 2014: Submission server is now open.

- July 15, 2014: Computational resources available, courtesy of NVIDIA.

- July 3, 2014: Please note that the August 15th deadline is firm this year and will not be extended.

- June 25, 2014: You can now browse all annotated detection images.

- May 3, 2014: ILSVRC2014 development kit and data are available. Please register to obtain the download links.

- April 8, 2014: Registration for ILSVRC2014 is open. Please register your team.

- January 19, 2014: Preparations for ImageNet Large Scale Visual Recognition Challenge 2014 (ILSVRC2014) are underway. Stay tuned!

- A PASCAL-style detection challenge on fully labeled data for 200 categories of objects, and

- An image classification plus object localization challenge with 1000 categories.

-

NEW: This year all participants are encouraged to submit object localization results; in past challenges, submissions to classification and classification with localization tasks were accepted separately.

Dataset 1: Detection

As in ILSVRC2013 there will be object detection task similar in style to PASCAL VOC Challenge. There are 200 basic-level categories for this task which are fully annotated on the test data, i.e. bounding boxes for all categories in the image have been labeled. The categories were carefully chosen considering different factors such as object scale, level of image clutterness, average number of object instance, and several others. Some of the test images will contain none of the 200 categories.

NEW: The training set of the detection dataset will be significantly expanded this year compared to ILSVRC2013. 60658 new images have been collected from Flickr using scene-level queries. These images were fully annotated with the 200 object categories, yielding 132953 new bounding box annotations.

Comparative scale

| PASCAL VOC 2012 | ILSVRC 2014 | ||

| Number of object classes | 20 | 200 | |

| Training | Num images | 5717 | 456567 |

| Num objects | 13609 | 478807 | |

| Validation | Num images | 5823 | 20121 |

| Num objects | 13841 | 55502 | |

| Testing | Num images | 10991 | 40152 |

| Num objects | --- | --- | |

Comparative statistics (on validation set)

| PASCAL VOC 2012 | ILSVRC 2013 | |

| Average image resolution | 469x387 pixels | 482x415 pixels |

| Average object classes per image | 1.521 | 1.534 |

| Average object instances per image | 2.711 | 2.758 |

| Average object scale (bounding box area as fraction of image area) |

0.207 | 0.170 |

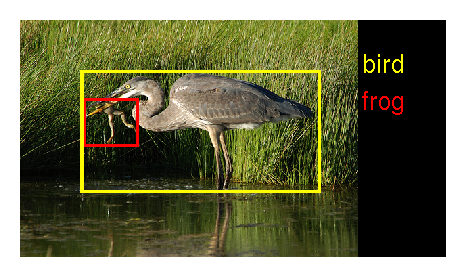

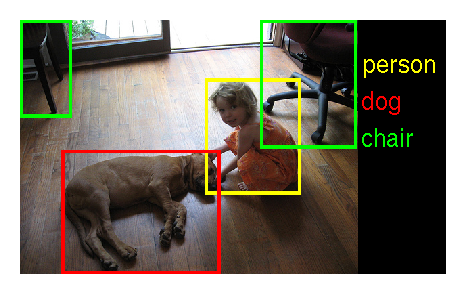

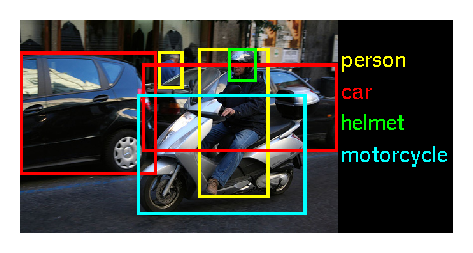

Example ILSVRC2014 images:

|

|

|

|

Dataset 2: Classification and localization

The data for the classification and localization tasks will remain unchanged from ILSVRC 2012 and ILSVRC 2013 . The validation and test data will consist of 150,000 photographs, collected from flickr and other search engines, hand labeled with the presence or absence of 1000 object categories. The 1000 object categories contain both internal nodes and leaf nodes of ImageNet, but do not overlap with each other. A random subset of 50,000 of the images with labels will be released as validation data included in the development kit along with a list of the 1000 categories. The remaining images will be used for evaluation and will be released without labels at test time.

The training data, the subset of ImageNet containing the 1000 categories and 1.2 million images, will be packaged for easy downloading. The validation and test data for this competition are not contained in the ImageNet training data.

Task 1: Detection

For each image, algorithms will produce a set of annotations $(c_i, b_i, s_i)$ of class labels $c_i$, bounding boxes $b_i$ and confidence scores $s_i$. This set is expected to contain each instance of each of the 200 object categories. Objects which were not annotated will be penalized, as will be duplicate detections (two annotations for the same object instance). The winner of the detection challenge will be the team which achieves first place accuracy on the most object categories.

Task 2: Classification and localization

In this task, given an image an algorithm will produce 5 class labels $c_i, i=1,\dots 5$ in decreasing order of confidence and 5 bounding boxes $b_i, i=1,\dots 5$, one for each class label. The quality of a labeling will be evaluated based on the label that best matches the ground truth label for the image. The idea is to allow an algorithm to identify multiple objects in an image and not be penalized if one of the objects identified was in fact present, but not included in the ground truth.

The ground truth labels for the image are $C_k, k=1,\dots n$ with $n$ class labels. For each ground truth class label $C_k$, the ground truth bounding boxes are $B_{km},m=1\dots M_k$, where $M_k$ is the number of instances of the $k^\text{th}$ object in the current image.

Let $d(c_i,C_k) = 0$ if $c_i = C_k$ and 1 otherwise. Let $f(b_i,B_k) = 0$ if $b_i$ and $B_k$ have more than $50\%$ overlap, and 1 otherwise. The error of the algorithm on an individual image will be computed using two metrics:

- Classification-only: \[ e= \frac{1}{n} \cdot \sum_k \min_i d(c_i,C_k) \]

- Classification-with-localization: \[ e=\frac{1}{n} \cdot \sum_k min_{i} min_{m} max \{d(c_i,C_k), f(b_i,B_{km}) \} \]

1. Are challenge participants required to reveal all details of their methods?

Entires to ILSVRC2014 can be either "open" or "closed." Teams submitting "open" entries will be expected to reveal most details of their method (special exceptions may be made for pending publications). Teams may choose to submit a "closed" entry, and are then not required to provide any details beyond an abstract. The motivation for introducing this division is to allow greater participation from industrial teams that may be unable to reveal algorithmic details while also allocating more time at the ECCV14 workshop to teams that are able to give more detailed presentations. Participants are strongly encouraged to submit "open" entires if possible.

2. Can additional images or annotations be used in the competition?

Entires submitted to ILSVRC2014 will be divided into two tracks: "provided data" track (entries using only ILSVRC2014 images and annotations provided for each task), and "external data" track (entries using any outside images or annotations). Any team that is unsure which track their entry belongs to should contact the organizers ASAP. Additional clarifications will be posted here as needed.

- Entries which use Caffe or OverFeat features pre-trained on ILSVRC2012-2014 classification data belong in the "external data" track of Task 1 (detection), and in the "provided data" track of Task 2 (classification with localization).

3. Do teams have to submit both classification and localization results in order to participate in Task 2?

Teams have to submit results in the classification with localization format (meaning both class labels and bounding boxes). If they choose to do so, they can return the full image as their guess for the object bounding box. The hope is that teams will make an effort to use at least simple heuristics to localize the objects, but of course they are not required to.

4. Will submissions to Task 2 be ranked according to classification-only error as well as classification with localization error?

Teams will be ranked according to both metrics, and both sets of results will be reported on the website. The choice of speakers at the ECCV workshop, however, will be biased towards teams which participate in the full classification with localization task.

5. How many entries can each team submit per competition?

Participants who have investigated several algorithms may submit one result per algorithm (up to 5 algorithms). Changes in algorithm parameters do not constitute a different algorithm (following the procedure used in PASCAL VOC).

NVIDIA has generously offered challenge participants access to either high-end GPUs or to their online GPU cluster. If you are interested in taking advantage of these resources, please contact Stephen Jones () via email with the subject “ILSVRC2014”. A brief description of your entry and how you’re utilizing GPUs would be appreciated, but not required.

When requesting access, please mention the NVIDIA code found on the ImageNet download page. You will need to sign the ImageNet agreement first.

-

Please be sure to consult the included readme.txt file for competition details. Additionally, the development kit includes

- Overview and statistics of the data.

- Meta data for the competition categories.

- Matlab routines for evaluating submissions.

- May 3, 2014: Development kit, data and evaluation software made available.

- August 15, 2014: Submission deadline. (firm)

- September 12, 2014: ImageNet Large Scale Visual Recognition Challenge 2014 workshop at ECCV 2014. Challenge participants with the most successful and innovative methods will be invited to present.

- Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, Li Fei-Fei. Imagenet: A Large-Scale Hierarchical Image Database. CVPR 2009.

bibtex

-

Olga Russakovsky*, Jia Deng*, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg and Li Fei-Fei. (* = equal contribution) ImageNet Large Scale Visual Recognition Challenge. IJCV, 2015.

paper |

bibtex |

paper content on arxiv

- Olga Russakovsky ( Stanford University )

- Sean Ma ( Stanford University )

- Jonathan Krause ( Stanford University )

- Jia Deng ( University of Michigan )

- Alex Berg ( UNC Chapel Hill )

- Fei-Fei Li ( Stanford University )